XMODEM in 2022

The year is 2022.

How did I find myself writing a new implementation of a 45-year-old protocol?

Using XMODEM for file transfers

Let's start at the beginning: I was trying to boot a system that receives its initial ramdisk over XMODEM.

XMODEM is a very simple protocol for sending data over a serial link. It's a way to transmit files from a host (which is sending the file) to a device (which receives the file).

In this case, the host is my laptop, and the device is an Oxide Computer Company server sled.

In an XMODEM transfer, the target file is broken up into chunks of a particular size and sent over the serial link to the device. After each chunk, the device replies with an acknowledgement.

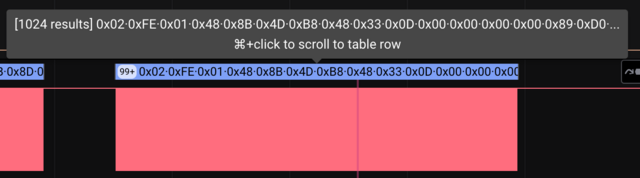

Our system is running in 1024-byte mode with 16-bit CRC checksums. Each packet is therefore 1029 bytes:

- 0x02 (STX), indicating that we're sending a 1K block

- Block number, starting at 1 (1 byte)

- 255 - block number (1 byte, used as a primitive checksum)

- 1024 bytes of data from the file

- CRC checksum (2 bytes)

This is followed by a single-byte reply from the receiver:

0x06 (ACK) indicating that the packet was received, or 0x15 (NAK) if something went wrong.
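To make the layout concrete, here's a minimal Rust sketch of framing a single block. The names `crc16_xmodem` and `build_packet` are made up for illustration (this is not the bootloader's or sx's code), and it assumes the checksum is the usual CRC-16/XMODEM (polynomial 0x1021, initial value 0) sent high byte first.

```rust
const STX: u8 = 0x02;
const BLOCK_SIZE: usize = 1024;

/// CRC-16/XMODEM: polynomial 0x1021, initial value 0, no bit reflection.
fn crc16_xmodem(data: &[u8]) -> u16 {
    let mut crc: u16 = 0;
    for &byte in data {
        crc ^= (byte as u16) << 8;
        for _ in 0..8 {
            crc = if crc & 0x8000 != 0 {
                (crc << 1) ^ 0x1021
            } else {
                crc << 1
            };
        }
    }
    crc
}

/// Frame one 1024-byte payload as a 1029-byte packet.
fn build_packet(block_number: u8, payload: &[u8; BLOCK_SIZE]) -> Vec<u8> {
    let mut packet = Vec::with_capacity(BLOCK_SIZE + 5);
    packet.push(STX); // 1K block marker
    packet.push(block_number); // starts at 1
    packet.push(255 - block_number); // complement of the block number
    packet.extend_from_slice(payload); // 1024 bytes of file data
    packet.extend_from_slice(&crc16_xmodem(payload).to_be_bytes()); // 2-byte CRC
    packet
}

fn main() {
    let packet = build_packet(1, &[0u8; BLOCK_SIZE]);
    println!("{} bytes, header {:02X?}", packet.len(), &packet[..3]);
}
```

The block number is a single byte, so in a long transfer it wraps around; the sketch leaves that bookkeeping to the caller.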

Using XMODEM makes the device bootloader simple, and it's an old enough standard that it's well supported everywhere. It's also stable: the recommended tool for loading the bootloader is lrzsz's sx executable, which was last updated in 1998 (and is therefore old enough to drink).

Getting started

In theory, it should be simple:

- Connect the host and device with a serial cable

- Open a serial terminal on the host (e.g. picocom)

- Use the bootloader's command-line interface to switch into receive mode

- Transfer the ramdisk file with sx (the XMODEM sender from lrzsz).

My colleagues using Linux (and occasionally illumos) had been booting systems in this way for months; I was the first to attempt the process on macOS.

As you may have guessed, it didn't work.

The ramdisk is 64 MB and the serial link is running at 3 MBaud, which means you'd expect a transfer time of roughly 4 minutes:

$$
\sf
\frac{10\ \frac{\textsf{bits}}{\textsf{byte}}}{3000000\ \frac{\textsf{bits}}{\textsf{sec}}}
\times
64\ \textsf{megabytes} = 223\ \textsf{secs} = 3.7\ \textsf{mins}
$$

(Each byte takes 10 bits because there's a start and stop bit; this analysis ignores the overhead due to XMODEM framing and ACKs.)
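As a rough check that the framing overhead really is negligible, here's the same estimate with the 5 bytes of framing counted on every packet. It assumes the 64 MB file splits into 65536 packets of 1029 bytes, and it still ignores the time spent waiting for ACKs:

$$
\sf
\frac{10\ \frac{\textsf{bits}}{\textsf{byte}}}{3000000\ \frac{\textsf{bits}}{\textsf{sec}}}
\times
65536\ \textsf{packets}
\times
1029\ \frac{\textsf{bytes}}{\textsf{packet}} \approx 225\ \textsf{secs}
$$

Framing adds only about a second, so it can't explain a slow transfer.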

I was seeing performance that trended towards about 20 minutes – and more importantly, it was hanging partway through the process.

    Xmodem sectors/kbytes sent: 53168/6646k
    Retry 0: Timeout on sector ACK
    Xmodem sectors/kbytes sent: 53176/6647k
    Retry 0: Cancelled
    Transfer incomplete
    *** exit status: 128 ***

Sometimes it would make it halfway; other times, it would fail after only a few megabytes, but it never succeeded in sending all of the data.

Failing faster

The first order of business was to figure out why my system was much slower than the theoretical performance. In short, this was due to implementation details of our USB-to-serial converter chip!

Taking a step back, since very few computers have hardware serial ports in the modern era, we're using FTDI's USB-to-serial modules. These chips have the notion of a "latency timer", which determines how long the chip waits before sending an incomplete packet. By default, this parameter is 16 milliseconds.

XMODEM breaks the data into 65536 packets of 1024 bytes, and reads a single byte (ACK) from the target after each packet. The chip's internal packet buffer is larger than 1 byte, so the chip waits for the latency timer period before transferring this ACK to the host. This adds 65536 × 16 milliseconds ≈ 17 minutes on top of the 3.7 minutes of wire time, which matches the roughly 20 minutes I saw!
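Here's that estimate as a small Rust sketch, so the effect of the latency timer is easy to play with. The function names are invented for illustration, and the model is deliberately crude: it assumes every ACK is held for one full timer period and nothing else costs time.

```rust
/// Seconds on the wire for `bytes` bytes at `baud`, at 10 bits per byte
/// (8 data bits plus start and stop bits).
fn wire_time_secs(bytes: u64, baud: u64) -> f64 {
    (bytes * 10) as f64 / baud as f64
}

/// Seconds spent waiting if every ACK is delayed by one latency timer period.
fn ack_delay_secs(packets: u64, latency_ms: u64) -> f64 {
    (packets * latency_ms) as f64 / 1000.0
}

fn main() {
    let file_bytes: u64 = 64 * 1024 * 1024; // 64 MB ramdisk
    let packets = file_bytes / 1024; // 65536 packets
    let wire = wire_time_secs(file_bytes, 3_000_000);

    // 16 ms is the default timer; 1 ms is the value written on Linux.
    for latency_ms in [16u64, 1] {
        let total = wire + ack_delay_secs(packets, latency_ms);
        println!("{:>2} ms timer: {:.1} min total", latency_ms, total / 60.0);
    }
}
```

Under these assumptions, the default 16 ms timer gives a total of roughly 21 minutes, while a 1 ms timer brings it under 5 minutes.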

On Linux, this can be adjusted by writing to sysfs:

echo 1 > /sys/devices/pci0000:00/.../usb1/1-3/1-3:1.0/ttyUSB0/latency_timer

My colleagues on Linux were adjusting the latency timer before sending the ramdisk image, and seeing much more reasonable transfer times.

On macOS, there's no such functionality. As it turns out, I'm not the first person to find this issue, but FTDI's response leaves something to be desired.

In the past, folks were advised to unpack the .kext file and manually edit an XML file (😱) to change these parameters. However, with the rise of signed drivers, this hack is no longer possible!

In their final message, FTDI effectively shrugs and says "buy a signing certificate from Apple or go look at our low-level drivers, good luck!".

I didn't want to buy a signing certificate, and definitely didn't want to use FTDI's drivers, so I decided to look elsewhere. Linux is open source (surprise!), so we can see exactly what the aforementioned command is doing:

    static int write_latency_timer(struct usb_serial_port *port)
    {
        /* ... setup elided ... */
        rv = usb_control_msg(udev,
                             usb_sndctrlpipe(udev, 0),
                             FTDI_SIO_SET_LATENCY_TIMER_REQUEST,
                             FTDI_SIO_SET_LATENCY_TIMER_REQUEST_TYPE,
                             l, priv->interface,
                             NULL, 0, WDR_TIMEOUT);
        /* ... */
    }

It's just a USB control transfer! We can do that!
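For a sense of how little is involved, here's a rough Rust sketch of the 8-byte setup packet behind that call. This is not code from the kernel or from libftdi: `SetupPacket` and `set_latency_timer_packet` are hypothetical names, and the two constant values (0x09 and 0x40) are my assumption of what the kernel's FTDI header defines, so check them against the source before relying on them.

```rust
/// The 8-byte setup packet that begins every USB control transfer.
#[derive(Debug, Clone, Copy, PartialEq)]
struct SetupPacket {
    request_type: u8, // bmRequestType
    request: u8,      // bRequest
    value: u16,       // wValue
    index: u16,       // wIndex
    length: u16,      // wLength: size of the data stage
}

impl SetupPacket {
    /// Serialize in wire order; multi-byte fields are little-endian.
    fn to_bytes(self) -> [u8; 8] {
        let [v0, v1] = self.value.to_le_bytes();
        let [i0, i1] = self.index.to_le_bytes();
        let [l0, l1] = self.length.to_le_bytes();
        [self.request_type, self.request, v0, v1, i0, i1, l0, l1]
    }
}

// ASSUMED values for the constants named in the kernel snippet.
const FTDI_SIO_SET_LATENCY_TIMER_REQUEST: u8 = 0x09;
const FTDI_SIO_SET_LATENCY_TIMER_REQUEST_TYPE: u8 = 0x40; // vendor, host-to-device

/// Mirror the arguments of the kernel's usb_control_msg() call.
fn set_latency_timer_packet(latency_ms: u8, interface: u16) -> SetupPacket {
    SetupPacket {
        request_type: FTDI_SIO_SET_LATENCY_TIMER_REQUEST_TYPE,
        request: FTDI_SIO_SET_LATENCY_TIMER_REQUEST,
        value: latency_ms as u16, // `l` in the kernel call
        index: interface,         // `priv->interface` in the kernel call
        length: 0,                // `NULL, 0`: no data stage
    }
}

fn main() {
    // Interface 0 is a placeholder; the right value depends on the chip.
    let packet = set_latency_timer_packet(1, 0);
    println!("{:02X?}", packet.to_bytes());
}
```

The whole request fits in the setup packet: the latency value travels in the value field, and there's no data stage at all.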

Doing the raw transfer myself proved unnecessary: libftdi provides a C API, and is available in Homebrew. (Looking at the code, libftdi is just libusb in a trench coat, but I appreciate the slightly higher level of abstraction!)

97 lines of C later, I had a program which would adjust the latency timer.

When I ran this program before starting the transfer, it went much faster – and still failed consistently.

The first rule of debugging embedded communications

At this point, I admitted (to myself) that I had failed to follow the first rule of debugging embedded communications problems:

If something is going wrong, stop messing with software and put a logic analyzer on the communication lines.

It's tempting to skip this step, because poking at software is easier, but seeing the actual signals is almost always the best way to understand what's going on.

I unpacked my Saleae logic analyzer and soldered a few wires to the serial adapter. The serial link includes four lines (plus ground):

- TX is data sent from the host to the device

- RX is data sent from the device to the host

- RTS indicates that the host is ready to receive data

- CTS indicates that the device is ready to receive data

The latter two signals are used for hardware flow control.

In our traces, RTS and CTS are always low, because the devices are processing data fast enough to avoid needing flow control.

With everything wired up, I captured an attempted upload while recording all four serial lines. As a reminder, here's what we expect to see in a single 1029-byte packet:

- 0x02 (STX), indicating that we're sending a 1K block

- Block number, starting at 1 (1 byte)

- 255 - block number (1 byte, used as a primitive checksum)

- 1024 bytes of data

- CRC checksum (2 bytes)

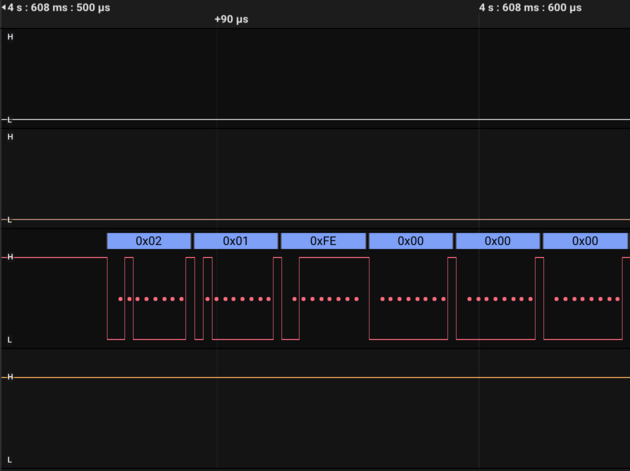

In the logic analyzer's trace, we can see the start of the first block:

As expected, the packet starts with 0x02, 0x01, 0xFE, then begins sending our data (which is all zeros).

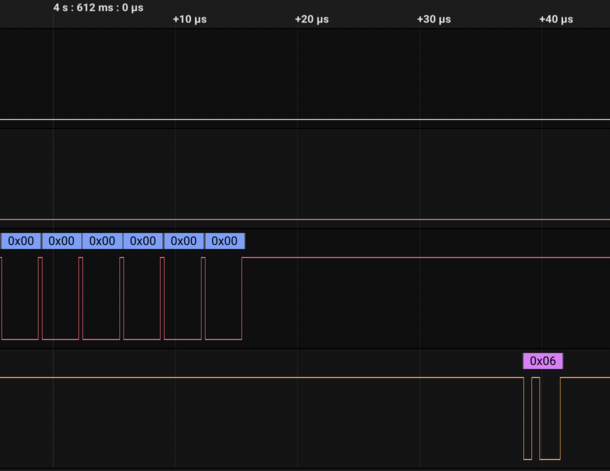

Scrolling past the data, we see the end of the block and an ACK (0x06) from the target device:

(Since the first block is entirely 0, the two-byte checksum is also 0)

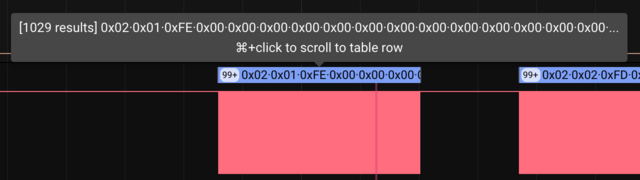

Zooming out, we see our expected 1029-byte packet:

Now, let's scroll all the way to the end of the transmission, where sx eventually hangs with a timeout.

What do we have here?

Well, there's your problem: my computer stops sending bytes before reaching the end of the packet! The bootloader is exonerated: it politely waits 60 seconds, then declares a timeout and drops back into its command-line interface.

At this point, the issue has been isolated to the sending side of the system:

- picocom invokes sx to send a file

- sx writes bytes to /dev/tty.usbserial-FT51SYUL

- macOS sends those bytes to the FTDI chip

Because my colleagues are using the same versions of picocom and sx (on Linux instead of macOS), I mostly trust those two layers of the stack. The macOS driver is a black box, so I trust it a little less.

The error message from sx also hints that the OS driver is the issue:

    Xmodem sectors/kbytes sent: 53168/6646k
    Retry 0: Timeout on sector ACK

This suggests that sx has sent all of its bytes and is waiting for the target device to send an ACK (0x06). However, the captured data shows that not all of the bytes have actually gone down the wire!

Sidestepping the macOS driver

After all of this debugging, the actual resolution is somewhat anticlimactic.

Remember libftdi from earlier, which I used to adjust the latency timer?

It turns out that it also exposes functions to send and receive bytes directly, which lets you sidestep the macOS driver entirely!

Using the ftdi bindings, I wrote a minimal XMODEM sender in 143 lines of Rust, including CRC code copied out of lrzsz.
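To give a flavor of what such a sender involves, here's a simplified sketch of the send loop. It is not the code from my Gist: it works on any `Read + Write` object instead of the ftdi bindings, `MockReceiver` is a hypothetical stand-in for the serial link, and it assumes a receiver that starts the transfer by sending 'C' (CRC mode) and acknowledges the final EOT. Timeouts and cancellation are left out entirely.

```rust
use std::io::{self, Read, Write};

const STX: u8 = 0x02; // start of a 1K block
const EOT: u8 = 0x04; // end of transmission
const ACK: u8 = 0x06;
const CRC_REQUEST: u8 = b'C'; // receiver asks for CRC mode
const PAD: u8 = 0x1A; // conventional filler for the final block
const MAX_TRIES: usize = 10; // arbitrary choice for this sketch

/// CRC-16/XMODEM: polynomial 0x1021, initial value 0.
fn crc16_xmodem(data: &[u8]) -> u16 {
    data.iter().fold(0u16, |mut crc, &byte| {
        crc ^= (byte as u16) << 8;
        for _ in 0..8 {
            crc = if crc & 0x8000 != 0 { (crc << 1) ^ 0x1021 } else { crc << 1 };
        }
        crc
    })
}

fn read_byte<P: Read>(port: &mut P) -> io::Result<u8> {
    let mut byte = [0u8; 1];
    port.read_exact(&mut byte)?;
    Ok(byte[0])
}

/// Send `bytes`, resending until the receiver answers with ACK.
fn send_until_acked<P: Read + Write>(port: &mut P, bytes: &[u8]) -> io::Result<()> {
    for _ in 0..MAX_TRIES {
        port.write_all(bytes)?;
        port.flush()?;
        if read_byte(port)? == ACK {
            return Ok(());
        }
    }
    Err(io::Error::new(io::ErrorKind::Other, "too many retries"))
}

fn send_xmodem_1k<P: Read + Write>(port: &mut P, data: &[u8]) -> io::Result<()> {
    // Wait for the receiver to request a CRC-mode transfer.
    while read_byte(port)? != CRC_REQUEST {}
    for (i, chunk) in data.chunks(1024).enumerate() {
        let mut payload = [PAD; 1024];
        payload[..chunk.len()].copy_from_slice(chunk);
        let block = (i as u8).wrapping_add(1); // starts at 1, wraps after 255
        let mut packet = vec![STX, block, 255 - block];
        packet.extend_from_slice(&payload);
        packet.extend_from_slice(&crc16_xmodem(&payload).to_be_bytes());
        send_until_acked(port, &packet)?;
    }
    send_until_acked(port, &[EOT])
}

/// Hypothetical receiver: sends 'C' once, then ACKs everything it is sent.
struct MockReceiver {
    greeted: bool,
    received: Vec<u8>,
}

impl Read for MockReceiver {
    fn read(&mut self, buf: &mut [u8]) -> io::Result<usize> {
        if buf.is_empty() {
            return Ok(0);
        }
        buf[0] = if self.greeted { ACK } else { CRC_REQUEST };
        self.greeted = true;
        Ok(1)
    }
}

impl Write for MockReceiver {
    fn write(&mut self, buf: &[u8]) -> io::Result<usize> {
        self.received.extend_from_slice(buf);
        Ok(buf.len())
    }
    fn flush(&mut self) -> io::Result<()> {
        Ok(())
    }
}

fn main() -> io::Result<()> {
    let mut port = MockReceiver { greeted: false, received: Vec::new() };
    send_xmodem_1k(&mut port, &[0x55; 1500])?;
    println!("sent {} bytes on the wire", port.received.len());
    Ok(())
}
```

Keeping the loop generic over `Read + Write` means the same code can be exercised against a mock like this one before it ever touches real hardware.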

This system worked reliably, sending the entire ramdisk at speeds similar to those we were seeing on Linux.

The one downside is that you can't use this as a sender from picocom, since the USB device has to be released. (This is a slight confounder to the results, since it means picocom could have been the source of my original issue; it's unlikely, because my colleagues were using the same version. To completely isolate the issue, I'd need to test using sx without picocom, which I didn't think of at the time.)

Polishing and releasing

If you find yourself in a similar situation, I've released rfsx on GitHub.

It's a bit more polished than the Gist above: I discovered the xmodem.rs crate, which implements the core protocol and let me delete a lot of code. The xmodem.rs API accepts a handle to a Read + Write object, and does everything else automatically!

(I'm using the oxidecomputer/xmodem.rs fork, which has been maintained by colleagues, rather than the version from crates.io)

I also added a snazzy progress bar, command-line flags to select an FTDI device by VID / PID, and a dummy mode to run without an actual serial link.

Thanks to Keith, Robert, and Cliff for helping debug this issue, and to Manuel for feedback on this blog post!

If you enjoy this kind of debugging story, also check out "More Tales from the Bringup Lab" from the Oxide and Friends podcast.